Using artificial neural networks, a Russian research team translates brain waves into images in real time

This is a stepping stone toward an efficient brain-computer interface in the future.

Researchers from the Russian company Neurobotics and the Moscow Institute of Physics and Technology (MIPT) have announced a new way of reading brain waves, with surprising results: while a person's eyes take in images, the resulting brain signals are read by neural networks and translated, in real time, into a reconstruction of what the person is seeing. The team published the report on bioRxiv, along with a video showing the brain-signal reading system in operation.


To develop a brain-wave reader, researchers need to understand how the brain encodes information. One way to study this is to monitor brain activity while the eyes take in visual information, for example while a person is watching a video.

Current methods for decoding brain signals rely on magnetic resonance imaging (MRI) or on analyzing signals from devices implanted in the brain. Both approaches are of limited use, in research as well as in real-life applications.

The brain-computer interface developed by MIPT and Neurobotics relies on artificial neural networks and electroencephalography (EEG), which records brain waves through electrodes placed on the participant's scalp. By analyzing this brain activity, the system reconstructs the images the person is looking at.

According to Vladimir Konyshev, head of the Neurobotics Laboratory at MIPT, the work serves two purposes:

- Developing brain-computer interfaces that help people with disabilities control exoskeletons and regain part of their lost motor abilities.

- Their ultimate goal is to improve the accuracy of brain-based control in the future, for healthy people as well.

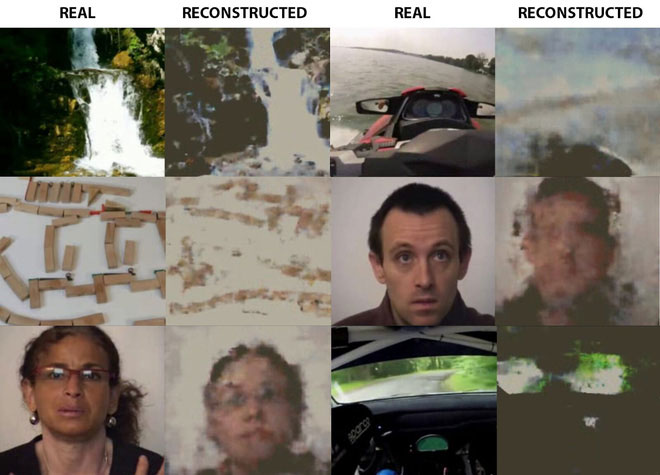

The Russian scientists' algorithm analyzes brain signals and "translates" them into images.

In the first phase of the experiment, the neuroscientists asked participants, all physically and mentally healthy, to watch a 20-minute video made up of 10-second clips taken from various YouTube videos. The clips fell into five categories: abstract shapes, waterfalls, human faces, moving mechanisms, and motor sports (more specifically, first-person footage of jet skis, snowmobiles, motorcycles, and cars).

By analyzing the EEG data, the team found that the brain-wave patterns differ for each category of video the participants were watching. This allowed them to analyze the brain's response in real time.
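The article does not say how the categories were told apart. As a minimal sketch, assuming simple band-power features (alpha 8 to 13 Hz, beta 13 to 30 Hz) and a nearest-centroid rule, the idea of "distinct patterns per category" could look like the code below. The sampling rate, band edges, category-to-band pairing, and the sine-wave "EEG" are all hypothetical stand-ins, not the team's data or method.

```python
import math

FS = 128  # sampling rate in Hz (assumed for illustration)
BANDS = [(8, 13), (13, 30)]  # hypothetical feature bands: alpha, beta (Hz)


def band_powers(epoch, fs=FS):
    """Return the spectral power in each band of BANDS for one single-channel epoch.

    Uses a naive discrete Fourier transform, which is fine for short toy epochs.
    """
    n = len(epoch)
    powers = []
    for lo, hi in BANDS:
        power = 0.0
        for k in range(1, n // 2):
            if lo <= k * fs / n < hi:
                re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(epoch))
                im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(epoch))
                power += (re * re + im * im) / n
        powers.append(power)
    return powers


def fit_centroids(epochs_by_category):
    """Average the band-power features of the training epochs of each category."""
    centroids = {}
    for category, epochs in epochs_by_category.items():
        features = [band_powers(e) for e in epochs]
        centroids[category] = [sum(col) / len(col) for col in zip(*features)]
    return centroids


def classify(epoch, centroids):
    """Assign an epoch to the category whose centroid is nearest (Euclidean distance)."""
    features = band_powers(epoch)
    return min(centroids, key=lambda c: math.dist(features, centroids[c]))


def sine(freq_hz, n=FS, fs=FS):
    """Synthetic one-second 'EEG' epoch: a pure sine wave (toy data, not real EEG)."""
    return [math.sin(2 * math.pi * freq_hz * t / fs) for t in range(n)]


# Toy training set: pretend one category evokes alpha-band and another beta-band activity.
training = {
    "faces": [sine(10), sine(11)],
    "waterfalls": [sine(20), sine(22)],
}
centroids = fit_centroids(training)
print(classify(sine(9), centroids))   # faces
print(classify(sine(25), centroids))  # waterfalls
```

A nearest-centroid rule is used here only because it is the simplest classifier that makes "each category has its own pattern" concrete; a real system would use many channels and a learned model.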

In the second phase of testing, the researchers randomly selected three of the five video categories. This is where artificial neural networks come in. The team built two networks: one generates category-specific images from random "noise", and the other generates similar "noise" from the EEG. The two networks were trained to work together, turning an EEG signal into images in real time that resemble what the participants are viewing.
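A minimal sketch of how two such networks can be chained, assuming toy linear stand-ins: `generator` plays the role of the network that turns "noise" into an image, and `LinearEncoder` plays the role of the network that produces matching "noise" from EEG features. The dimensions, the invented mapping `TRUE_W`, and the 2x2 "images" are hypothetical; the actual system uses deep networks trained on real recordings.

```python
import random

random.seed(0)  # reproducible toy initialization

EEG_DIM = 3     # EEG features per time window (hypothetical)
LATENT_DIM = 2  # size of the "noise" vector the generator consumes (hypothetical)


def generator(z):
    """Stand-in for network 1: turns a 'noise' vector into a tiny 2x2 image.

    Here it is a fixed linear map; the real system would use a trained deep generator.
    """
    a, b = z
    return [[a, b], [a + b, a - b]]


class LinearEncoder:
    """Stand-in for network 2: maps EEG features into the generator's noise space."""

    def __init__(self, in_dim, out_dim):
        self.w = [[random.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]

    def __call__(self, x):
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in self.w]

    def fit(self, pairs, lr=0.05, epochs=500):
        """Stochastic gradient descent on squared error between E(eeg) and the target noise."""
        for _ in range(epochs):
            for x, z_target in pairs:
                z = self(x)
                for i, row in enumerate(self.w):
                    err = z[i] - z_target[i]
                    for j in range(len(row)):
                        row[j] -= lr * err * x[j]


def eeg_to_image(eeg, encoder):
    """Full pipeline: EEG features -> noise vector -> image."""
    return generator(encoder(eeg))


# Toy training pairs: (EEG features, noise vector that produced the image being viewed).
# TRUE_W is an invented ground-truth mapping used only to generate these pairs.
TRUE_W = [[1.0, 0.0, 0.5], [0.0, -1.0, 0.5]]
samples = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
pairs = [(x, [sum(w * xi for w, xi in zip(row, x)) for row in TRUE_W]) for x in samples]

encoder = LinearEncoder(EEG_DIM, LATENT_DIM)
encoder.fit(pairs)
print(eeg_to_image([2.0, 1.0, 0.0], encoder))  # close to [[2, -1], [1, 3]]
```

The design point the sketch illustrates is that the generator only ever sees "noise" vectors, so once the encoder learns to emit noise in the same space, any EEG window can be pushed through both networks to produce an image.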

How the algorithm translates brain signals into images.

To test whether the system could generate images from brain activity alone, participants were shown videos from the same categories as before, but with different content. While they watched, the device recorded their EEG signals and sent them to the neural networks for processing.

The results were convincing, and the system passed the test: 90% of the images it generated were clear enough to be assigned to a category.
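One plausible reading of the 90% figure is a simple categorization rate: the share of generated images that were assigned to the category actually being watched. The sketch below assumes that reading; the function name and the labels are hypothetical and are not data from the study.

```python
def categorization_rate(true_categories, predicted_categories):
    """Fraction of generated images assigned to the category the participant was watching."""
    if len(true_categories) != len(predicted_categories) or not true_categories:
        raise ValueError("need two non-empty lists of equal length")
    hits = sum(t == p for t, p in zip(true_categories, predicted_categories))
    return hits / len(true_categories)


# Hypothetical labels for 10 generated images (not data from the study).
watched = ["faces"] * 5 + ["waterfalls"] * 5
judged = ["faces"] * 5 + ["waterfalls"] * 4 + ["faces"]
print(categorization_rate(watched, judged))  # 0.9
```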

"EEG is a collection of brain signals measured from the scalp. Researchers used to think that studying brain activity via EEG was like studying how a steam engine works by analyzing the smoke it gives off," said Grigory Rashkov, a co-author of the study, a researcher at MIPT and a programmer at Neurobotics. "But we did not expect [the signal] to contain enough information to reconstruct the images the participants were watching live. It turned out to be possible."

"Moreover, we can use the results of this research as a basis for future brain-computer interfaces. With current technology, the neural interface announced by Elon Musk faces difficulties because of the complicated surgery it requires and because the implanted device itself degrades through oxidation. We hope this new approach will lead to neural interfaces that do not require surgery deep inside the brain."
