Deepfake - the most dangerous weapon born in the digital age

The dark side of technology is more visible than ever: a deepfake video can plant misleading ideas in the minds of people who do not know what they are looking at.

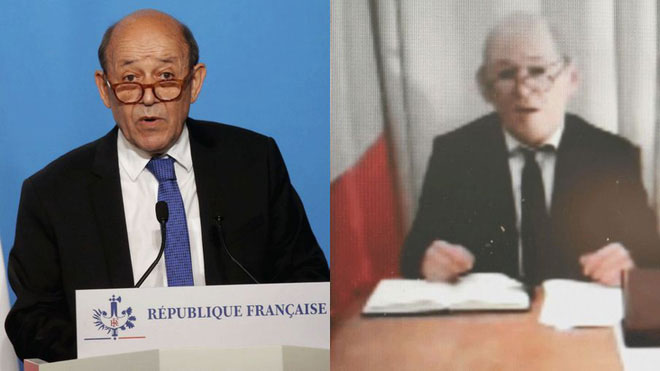

Not long ago, a fraud came to light that seemed to belong only in Mission Impossible or Scooby-Doo: a scammer wore a silicone mask of the French Foreign Minister, Mr. Jean-Yves Le Drian, and appeared in videos asking billionaires for money. Under the pretext of "helping France ransom hostages", the scammer collected tens of millions of dollars before being arrested.

With nothing more than a blurry video and a compelling story, someone can pull off a million-dollar con. Now imagine a sharp video with a familiar face and a persuasive voice: how easy it would be to spread fake messages or carry out scams.

And this is the real concern: technology has advanced to the point where the face of a well-known individual can be fitted onto a deceiver's image.

I am talking about deepfake videos: videos in which the speaker has a familiar face. It could be a head of state, a respected public figure, or even one of your relatives. When the image is very familiar to you, the words coming out of that person's mouth suddenly become more convincing. That is simply our nature: we trust what is familiar.

What is 'deepfake', the leading scam of the moment?

Deepfake first appeared as an extremely advanced tool for making pornographic content, but its potential to disrupt global security has not been properly considered.

When a depraved person wants a nude image of someone, they turn to photo editing tools or to skilled Photoshop users. But once the image has to move, Photoshop can no longer satisfy that desire.

That was the case until machine learning and neural networks arrived. Able to take in input data, analyze it, learn its existing patterns, and then produce a highly convincing final product, the technology became the most advanced tool of the porn industry.

It goes far beyond image editing tools when it comes to making porn videos, pasting a target's face onto any performer with a similar face. The best-known technology of this kind is deepfake, a name that combines 'deep' from 'deep learning' with 'fake'.

Deepfake: fakery combined with the power of machine learning becomes a "grade 1" fake, a counterfeit that looks almost genuine and is of relatively high quality.

What can Deepfake do?

As has been warned many times, fake news is an extremely dangerous way of spreading information. But with a bit of awareness, more caution about what we read, and five minutes on Google, we can avoid being led by the nose.

But few people would think to question whether a monologue spoken in a video is fake, however convincing it seemed at first. In a deepfake, everything from the words to the mouth speaking them is fake: the malicious script is written by an individual (or a group with its own interests), and the video itself is generated by computer.

"Uncanny valley" is the term for the feeling you get when looking at this photo. Pictured is the robot Sophia.

For now, these fake videos all look odd. They evoke the uncanny valley: the unease you feel when you look at something extremely human-like that nonetheless does not feel human at all. The signs of forgery are also easy to spot: the speaker's image is distorted in many places, and some parts of the picture do not match the background, because computer image processing has not yet reached a true-to-life level.

What can we do?

So far, two objective factors have kept deepfake videos from being as dangerous as they could be, confining them largely to pornography:

- The technology for making this content is still quite expensive, although the speed of forgery has improved significantly, to a frightening degree.

- Researchers are working to develop tools that detect fake content. If technology can create such repugnant things, technology will also find a way to detect them and stop them from spreading.

As for individuals, the only advice is to stay alert when using the Internet and not to share or interact with malicious content. Doing so helps the people behind it make illicit profits and contributes to the spread of a new epidemic named deepfake.
