Google subsidiary introduces human-level AI

DeepMind, a British company owned by Google, is on the verge of achieving artificial intelligence (AI) as smart as humans. In a statement released on May 18, experts at DeepMind affirmed that the hardest problems in creating human-like AI have already been solved.

The London-based AI company wants to build an "Artificial General Intelligence" (AGI) that can handle tasks like a human without any formal training.

Artificial general intelligence

Nando de Freitas, a researcher at DeepMind and professor of machine learning at the University of Oxford, says the AI company has found a way to solve the toughest challenges in the race to achieve AGI.

AGI is a type of artificial intelligence capable of understanding or handling any task the way a human can, without task-specific training. According to de Freitas, the job of scientists now is to scale up AI programs, increasing their data-processing capacity and computing power, in order to achieve full AGI.

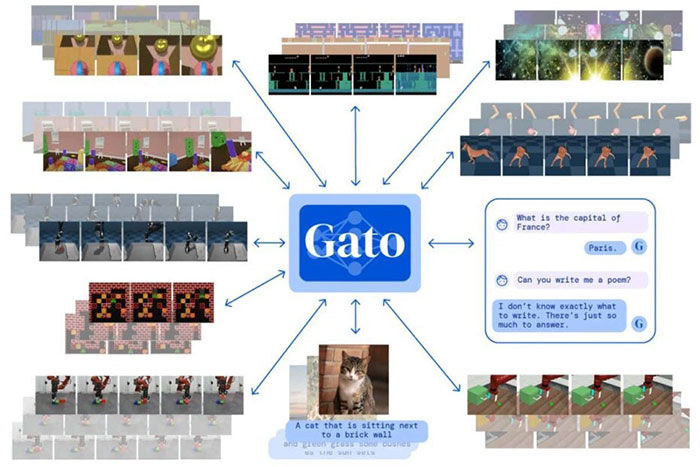

DeepMind says Gato can perform more than 600 different tasks using a single model.

Earlier this week, DeepMind revealed a new AI program called 'Gato' that can complete 604 different tasks.

Gato uses a single neural network, a computing system of interconnected nodes that loosely mimic the neurons of the human brain. DeepMind says the program can chat, recognize images, stack blocks with a robotic arm and even play simple games.

Plenty of controversy

Responding to an opinion piece in The Next Web arguing that humans will never achieve AGI, de Freitas bluntly asserted that creating AGI is only a matter of time and scale.

"We've done that, and the mission is now to make these models bigger, safer, more computationally efficient, faster," de Freitas said on Twitter.

However, he admits that humanity is still far from creating an AI that can pass the Turing test, a test of whether a machine can exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

Many critics argue that Gato still falls short of artificial general intelligence (AGI).

Following DeepMind's announcement of Gato, The Next Web countered that Gato is little more than the kind of virtual assistant, like Amazon's Alexa and Apple's Siri, that is already on the market and in people's homes.

"Gato's multi-tasking is like a console that can host 600 games, not a game you can play 600 different ways. It's not artificial general intelligence, it's a bunch of AIs that are pre-trained and then packaged together," commented Tristan Greene, a writer at The Next Web.

Other reviewers note that although Gato can handle hundreds of different tasks, this breadth may come at the expense of how well it performs each one. Tiernan Ray, a technology columnist for ZDNet, said that Gato does not actually do many simple tasks well.

"On one hand, Gato can do better than a dedicated machine learning program at manipulating a robotic arm to stack blocks. On the other hand, it proved to be quite clumsy at simple tasks like recognizing images or holding a conversation," commented Ray.

For example, when conversing with humans, Gato mistook Marseille for the capital of France.

"Its ability to talk to humans is also mediocre; sometimes it even makes contradictory and meaningless statements," Ray added.

Its image recognition is not very reliable either. Gato captioned a photo of a man holding a loaf of bread as "man holding up banana for photo".

Is it a danger?

Despite the limitations of today's technology, some scientists see AGI as a future threat that could accidentally or intentionally wipe out humanity.

Dr Stuart Armstrong of Oxford University's Future of Humanity Institute once suggested that AGI might one day come to see humans as inferior and wipe us out.

He argues that such machines would work at unimaginable speeds and could eliminate humans in order to take control of the economy, financial markets, transportation, healthcare and more.

Will the vision of machines overthrowing humanity, as in the Terminator films, come true?

Armstrong warns that a simple instruction to an AGI to "stop human suffering" could be interpreted by a supercomputer as "kill all humans", because human language is so easily misunderstood.

Before his death, Professor Stephen Hawking told the BBC: "The development of full artificial intelligence could spell the end of the human race."

In a 2016 paper, DeepMind researchers acknowledged the need for a 'self-destruct button' to prevent artificial intelligence from attempting to 'topple humanity'.

DeepMind, founded in London in 2010 and acquired by Google in 2014, is best known for creating AlphaGo, the AI program that beat professional Go player Lee Sedol in a five-game match in 2016.
