10 of the worst failures of artificial intelligence in 2017

If Sophia was the "sweet fruit" of artificial intelligence in 2017, self-driving vehicle accidents and the defeat of Face ID were the technology's unforgettable "bitter fruits".

From smart speakers and chatbots to Face ID and chat applications built on virtual assistants, AI seems to have crept into every corner of the technology market.

According to a Gartner report released in October 2017, AI is expected to create 2.3 million jobs in 2020. China in particular has clearly expressed its desire to master AI and take a pioneering position in the near future.

But the growth of AI does not always bring immediate success. To get where it is today, AI has gone through many failures, some of them serious. Each time, people have found ways to fix the problems and improve the technology.

Below is a summary of 10 of the most serious AI failures of 2017, which the technology community can treat as lessons for improving AI in the future.

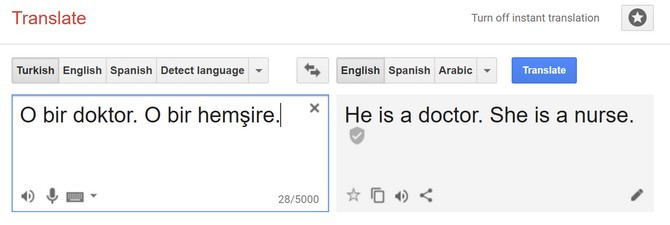

1. Google Translate demonstrates gender bias in Turkish-English translation

Turkish has a single gender-neutral third-person pronoun, "o". This word has no direct equivalent in English, so Google Translate's AI system has to guess which gendered pronoun to use for the subject.

According to Quartz, Google Translate's algorithms showed gender bias when choosing the corresponding English pronoun. The tool translated the neutral pronoun as "he" when it appeared in context with words like "doctor" or "hard working", and as "she" with words like "lazy" and "nurse".

2. Facebook chatbots Alice and Bob develop their own language

Facebook researchers reported that two AI chatbots, named Alice and Bob, developed their own language while chatting with each other.

Although a self-invented language may not be harmful for now, Facebook believes it is a form of shorthand and that it carries a potential risk of losing control. Both Alice and Bob were shut down after their conversations were discovered.

3. Self-driving bus has an accident on its first day on the road

This happened to a self-driving bus on its first run in Las Vegas, USA. Less than 2 hours after it started rolling, the bus was involved in an accident with a cargo truck that suddenly pulled out of a warehouse onto the road.

Local authorities said the self-driving bus was not at fault because its sensors were working correctly and ordered the vehicle to stop as soon as the dangerous situation was detected. However, many people believe the accident would not have happened if both vehicles had been equipped with object-detection sensors. Others argue that a human driver might have handled the situation differently.

4. Google Allo suggests an emoji of a man wearing a turban in response to a gun emoji

For many users, Google Allo's AI-based smart replies are interesting and convenient because they make it possible to respond quickly with text or commands. However, CNN found that the AI behind Google Allo appeared to be biased.

When a user sent a gun emoji in Google Allo, the app immediately suggested 3 negative and biased emoji: a thumbs-down, a knife, and a man wearing a turban, an image associated with Muslim men.

Immediately after this was discovered, Google apologized to users and confirmed that it would change Allo's algorithm.

5. Face ID on the iPhone X is defeated with a mask

One week after the iPhone X was released, the technology world was buzzing after Vietnamese security company Bkav claimed, and even demonstrated, that it could defeat Face ID (the AI-based facial authentication feature) on an iPhone X using a mask costing about 150 USD.

Apple gave no official response after the incident. But once again, security technology in general and face recognition in particular still leave much to discuss. In particular, AI needs to solve security problems as thoroughly as possible before it is applied in real products.

6. Wrong prediction for the Kentucky Derby horse race

After correctly guessing all 4 top horses in 2016, the AI failed in 2017, when it could not predict which horse would win the annual Kentucky Derby.

The AI predicted only 2 of the top 4 finishers, and even for those two horses it got the finishing order wrong. Horse racing is always a game of chance, but many people still put great faith in AI.

7. Alexa causes trouble for its owner by turning on music by itself

German police broke into an apartment one night in November 2017 after many neighbors complained about loud noise coming from it in the early morning.

Amusingly, the music was not coming from a noisy party but from an Amazon Echo smart speaker, which had started playing music by itself while the owner was out. The police replaced the lock, so when the owner returned he had to call a locksmith to get into his home.

8. Google Home devices stop working in large numbers

Nearly all Google Home smart speakers suddenly stopped working in June 2017. Users reported an error whenever they tried to talk to the speaker. After receiving a great deal of feedback, Google found a fix for the problem.

9. Google Home Mini spies on its owner

In October, security researchers found that Google Home Mini smart speakers secretly turned on, recorded thousands of user conversations and sent them to Google.

The incident came to light only when a user noticed that the virtual assistant had turned itself on and was trying to listen to the TV. After checking the My Activity tool, the user discovered that the device had been silently monitoring and recording him for a long time. Google quickly identified the cause and released a patch.

10. Facebook lets advertisers target anti-Semitic users

Facebook uses AI to help operate its service platform, which allows companies and brands to buy advertising targeted by demographic.

However, a scandal broke in September 2017 when ProPublica disclosed that some of the demographic targeting categories related to people with racist or anti-Semitic views.

The organization also found that advertisements could be targeted at specific people who had shown an interest in hostility toward Jews or in extreme anti-Jewish ideas.

For its part, Facebook said this was the result of a faulty algorithm, not of human action. The company promised to remove the categories to clean up its advertising environment.
