The fear of AI becoming a weapon to destroy humanity

A new report commissioned by the US State Department warns that AI could pose an "extinction-level threat to humanity" and calls for a legal cap on the computing power used to train AI models.

The report, obtained by Time, is titled An Action Plan to Increase the Safety and Security of Advanced AI. It proposes an unprecedented, far-reaching set of actions and policies on artificial intelligence that, if enacted, could completely change the AI industry.

"Advanced AI development poses urgent and growing risks to national security," the report's introduction reads. " The rise of advanced AI and AGI has the potential to destabilize global security, in ways that are reminiscent of the use of nuclear weapons."

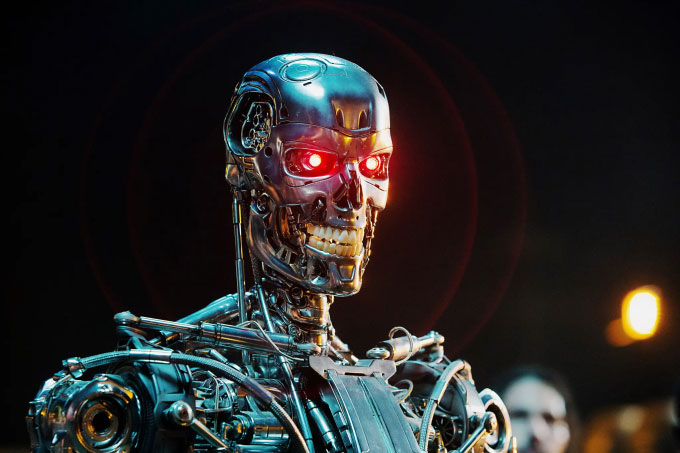

A robot from the movie "The Terminator". (Photo: Paramount).

Specifically, the report recommends that the US Congress make it illegal to train AI models using more than a certain level of computing power. The threshold would be set and enforced by a federal AI agency, which would be allowed to require AI companies to apply for government permission to train and deploy new models above set thresholds.

Controlling the training of advanced AI systems above a certain threshold would help "regulate" competition between AI developers, the report argues, thereby slowing the race to build both hardware and software. Over time, the federal AI agency could raise the threshold and allow more advanced AI systems to be trained when it "feels sufficiently safe", or lower it when "threats are detected" in AI models.

In addition, the report recommends that authorities "urgently" consider restricting the publication of the weights, or inner workings, of powerful AI models, with violations potentially punishable by imprisonment. The government should also tighten controls on the production and export of AI chips, and direct federal funding toward research to make advanced AI safer.

According to public records, the report was written by Gladstone AI, a four-employee company that runs technical briefings on AI for government employees. The US State Department commissioned it in November 2022 as part of a $250,000 federal contract, and received it as a 247-page document on February 26.

The US State Department has not commented.

According to the same source, the three authors researched the issue by talking to more than 200 government employees, experts and staff at leading AI companies such as OpenAI, Google DeepMind, Anthropic and Meta. A detailed intervention plan was developed over 13 months. However, the first page of the report says "the recommendations do not reflect the views of the State Department or the US Government".

According to Greg Allen, an expert at the Center for Strategic and International Studies (CSIS), the proposal may face political difficulties. "I think the recommendation is unlikely to be adopted by the US government. Unless there is some 'exogenous shock', I think the current approach is difficult to change."

AI is developing explosively, with many models able to perform tasks previously seen only in science fiction. In response, many experts have warned about the dangers. In March 2023, more than 1,000 prominent figures in technology, including billionaire Elon Musk and Apple co-founder Steve Wozniak, signed a letter calling on companies and organizations worldwide to pause the race to build ever more powerful AI for six months in order to jointly develop a common set of rules for the technology.

In mid-April 2023, Google CEO Sundar Pichai said AI was giving him sleepless nights because it could be more dangerous than anything humans have ever seen, while society is not ready for its rapid development.

A month later, a statement signed by 350 leaders and experts in the AI industry issued a similar warning. "Reducing the risk of extinction due to AI must be a global priority, alongside other societal-scale risks such as pandemics or nuclear war," read the statement, which the Center for AI Safety (CAIS) in San Francisco posted on its website in May 2023.

A Stanford University survey in April 2023 also showed that 56% of computer scientists and researchers believe generative AI will transition to AGI in the near future. Among AI experts, 58% rate AGI as a "major concern" and 36% say the technology could lead to a "nuclear-level disaster". Some say AGI could represent a so-called "technological singularity" - a hypothetical point in the future where machines irreversibly surpass human capabilities and could threaten civilization.

"The progress in AI and AGI models over the past few years has been amazing ," DeepMind CEO Hassabis told Fortune . "I don't see any reason for that progress to slow down. We only have a few years, or at the latest, a decade to prepare . "
